Types of Sum of Squares Calculation

The sum of squares calculation is an important tool in financial analysis. It helps to measure the variation or dispersion of data points in a dataset. There are different types of sum of squares calculations that can be used depending on the specific analysis being performed.

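1. Within-Group Sum of Squares Calculation

The within-group sum of squares measures the variation of data points around their own group means. It is calculated by summing the squared differences between each data point and the mean of its group, across all groups.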

2. Between-Group Sum of Squares Calculation

The between-group sum of squares is calculated by summing the squared differences between each group mean and the overall mean, weighted by the number of observations in each group.

3. Total Sum of Squares Calculation

The total sum of squares calculation measures the total variation in a dataset. It quantifies the differences between individual data points and the overall mean. The total sum of squares is calculated by summing the squared differences between each data point and the overall mean.

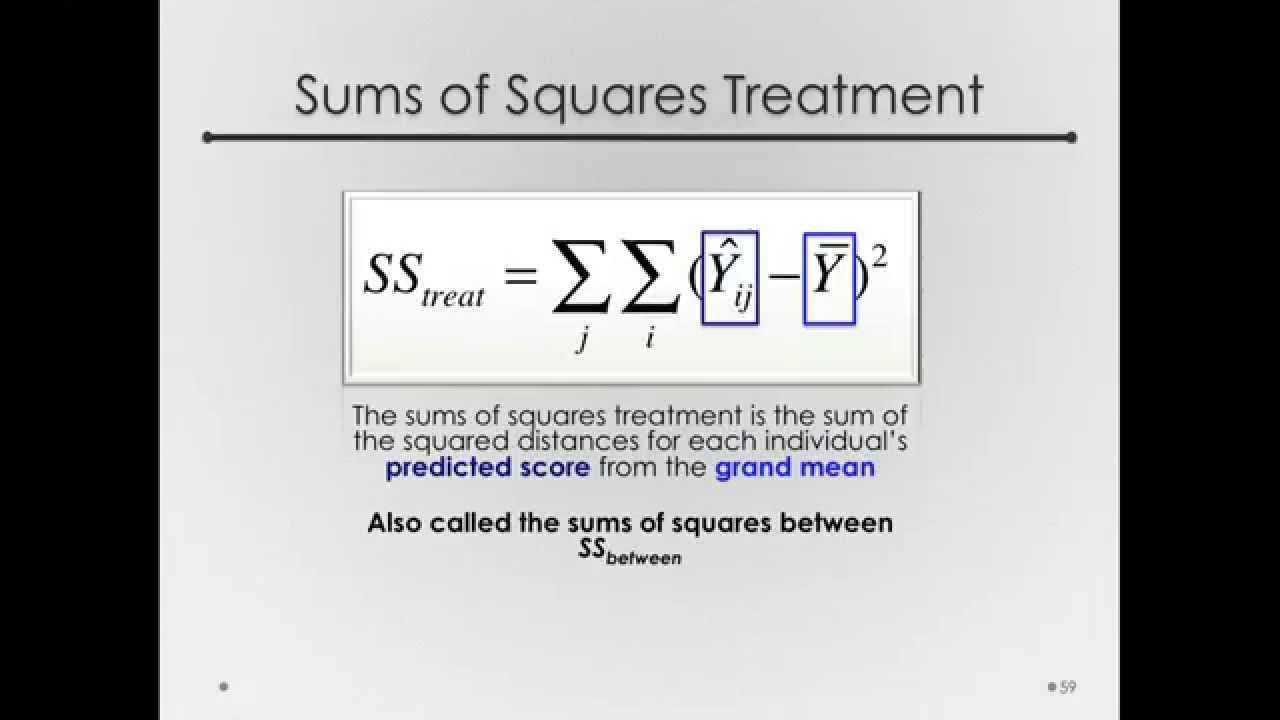

4. Regression Sum of Squares Calculation

The regression sum of squares calculation is used in regression analysis to measure the variation explained by the regression model. It quantifies the differences between the predicted values from the regression model and the overall mean. The regression sum of squares is calculated by summing the squared differences between the predicted values and the overall mean.

Overall, the different types of sum of squares calculations show how much of a dataset's variation lies within groups, how much lies between groups, and how much is explained by a model. They are essential tools in financial analysis and other statistical analyses.

Within-Group Sum of Squares Calculation

The within-group sum of squares calculation is a statistical method used to analyze the variability within different groups or categories of data. It is an important tool in various fields, including finance, economics, and social sciences.

To calculate the within-group sum of squares, the following steps are typically followed:

- First, the mean of each group is calculated. This involves finding the average value of the data within each group.

- Next, the deviation of each data point from its group mean is calculated. This is done by subtracting the group mean from each data point.

- The squared deviation for each data point is then calculated by squaring the deviation.

- Finally, the squared deviations are summed within each group, and the group sums are added together to obtain the within-group sum of squares.
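The steps above can be written compactly as a formula. The notation is an assumption introduced here for illustration: $k$ is the number of groups, $n_j$ is the number of data points in group $j$, $x_{ij}$ is the $i$-th data point in group $j$, and $\bar{x}_j$ is the mean of group $j$.

$$\mathrm{SSW} = \sum_{j=1}^{k} \sum_{i=1}^{n_j} (x_{ij} - \bar{x}_j)^2$$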

The within-group sum of squares is an important component in various statistical analyses, such as analysis of variance (ANOVA) and regression analysis. It provides insights into the variability within different groups and helps to determine the significance of differences between groups.

| Group | Data Points | Group Mean | Deviation | Squared Deviation | Sum of Squared Deviations |
|---|---|---|---|---|---|
| Group 1 | 10, 12, 14 | 12 | -2, 0, 2 | 4, 0, 4 | 8 |
| Group 2 | 8, 9, 11 | 9.33 | -1.33, -0.33, 1.67 | 1.78, 0.11, 2.78 | 4.67 |
| Group 3 | 7, 9, 10 | 8.67 | -1.67, 0.33, 1.33 | 2.78, 0.11, 1.78 | 4.67 |

In the above example, we have three groups with different data points. We calculate the mean for each group and then calculate the deviation of each data point from its group mean. Each deviation is then squared, and the squared deviations are summed within each group, giving 8 for Group 1 and approximately 4.67 each for Groups 2 and 3. Adding these group sums gives a within-group sum of squares of approximately 17.33.
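The worked example can be checked with a short script. This is a minimal sketch in plain Python; the function name `within_group_ss` is a hypothetical helper chosen for illustration, and the data are the three groups from the table.

```python
# Groups taken from the example table.
groups = {
    "Group 1": [10, 12, 14],
    "Group 2": [8, 9, 11],
    "Group 3": [7, 9, 10],
}


def within_group_ss(groups):
    """Sum the squared deviations of each point from its own group mean."""
    total = 0.0
    for values in groups.values():
        group_mean = sum(values) / len(values)
        total += sum((x - group_mean) ** 2 for x in values)
    return total


ssw = within_group_ss(groups)
print(round(ssw, 2))  # 17.33
```

Working from unrounded group means avoids the small rounding differences that appear when the two-decimal values are squared by hand.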

By analyzing the within-group sum of squares, we can determine the variability within each group and assess the significance of differences between the groups. This information is valuable for making informed decisions and drawing conclusions based on the data.

Between-Group Sum of Squares Calculation

The between-group sum of squares calculation is a statistical method used in financial analysis to measure the variation between different groups or categories. It is often used in the analysis of variance (ANOVA) to determine the significance of differences between groups.

To calculate the between-group sum of squares, you first need to calculate the overall mean of the data set. Then, for each group, calculate the mean of that group. The difference between each group mean and the overall mean is squared and multiplied by the number of observations in that group, and these weighted squared differences are summed up for all groups.
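As a rough illustration, the calculation can be sketched in plain Python. The data reuse the three groups from the within-group example table, the function name `between_group_ss` is hypothetical, and each squared difference is weighted by the group size, as in the definition given at the start of this article.

```python
# Same three groups as in the within-group example table.
groups = [[10, 12, 14], [8, 9, 11], [7, 9, 10]]


def between_group_ss(groups):
    """Sum of n_j * (group mean - overall mean)^2 over all groups."""
    all_values = [x for g in groups for x in g]
    overall_mean = sum(all_values) / len(all_values)
    total = 0.0
    for g in groups:
        group_mean = sum(g) / len(g)
        total += len(g) * (group_mean - overall_mean) ** 2
    return total


ssb = between_group_ss(groups)
print(round(ssb, 2))  # 18.67
```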

The between-group sum of squares represents the amount of variation in the data that can be attributed to the differences between groups. A larger between-group sum of squares indicates a greater difference between groups, while a smaller sum of squares suggests that the groups are similar.

This calculation is useful in financial analysis because it allows analysts to determine whether there are significant differences between groups, such as different investment strategies or market segments. By comparing the between-group sum of squares to the within-group sum of squares, analysts can assess the impact of different factors on the overall variation in the data.

For example, suppose you are analyzing the performance of three different investment portfolios. You calculate the between-group sum of squares and find that it is large relative to the within-group sum of squares once each is divided by its degrees of freedom. This suggests that the portfolios' returns differ by more than the variation within each portfolio would explain, indicating that the choice of investment strategy accounts for a meaningful share of the overall variation in returns.
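In one-way ANOVA, this comparison is usually made with the F ratio: each sum of squares is divided by its degrees of freedom to give a mean square, and the between-group mean square is divided by the within-group mean square. The sketch below is illustrative only. It uses the three groups from the within-group example table as stand-in data, not actual portfolio returns, and all variable names are assumptions.

```python
# Stand-in data: the three groups from the within-group example table.
groups = [[10, 12, 14], [8, 9, 11], [7, 9, 10]]


def mean(values):
    return sum(values) / len(values)


all_values = [x for g in groups for x in g]
grand_mean = mean(all_values)

# Between-group and within-group sums of squares.
ssb = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
ssw = sum((x - mean(g)) ** 2 for g in groups for x in g)

k = len(groups)        # number of groups
n = len(all_values)    # total number of observations

msb = ssb / (k - 1)    # between-group mean square
msw = ssw / (n - k)    # within-group mean square
f_ratio = msb / msw
print(round(f_ratio, 2))  # 3.23
```

Whether a given F ratio counts as significant depends on comparing it with the F distribution for (k - 1, n - k) degrees of freedom at a chosen significance level.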

Total Sum of Squares Calculation

The total sum of squares (TSS) is a statistical measure used in financial analysis to determine the total variation in a dataset. It represents the sum of the squared differences between each data point and the overall mean. The TSS is an important component in various statistical calculations, such as analysis of variance (ANOVA) and regression analysis.

To calculate the TSS, you first need to calculate the mean of the dataset. Then, for each data point, subtract the mean and square the result. Finally, sum up all the squared differences to get the TSS.

The TSS can be represented mathematically as:

$$\mathrm{TSS} = \sum_{i=1}^{n} (x_i - \bar{x})^2$$

Where:

- TSS is the total sum of squares

- Σ represents the summation symbol, indicating that you need to sum up all the squared differences

- xi is each data point in the dataset

- x̄ is the mean of the dataset

- n is the number of data points in the dataset
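A minimal Python sketch of this calculation follows. The function name `total_ss` is hypothetical, and the data are the nine values from the within-group example table, pooled into a single list.

```python
# All nine data points from the within-group example table, ignoring groups.
data = [10, 12, 14, 8, 9, 11, 7, 9, 10]


def total_ss(values):
    """Sum of squared differences between each value and the overall mean."""
    overall_mean = sum(values) / len(values)
    return sum((x - overall_mean) ** 2 for x in values)


tss = total_ss(data)
print(tss)  # 36.0
```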

The TSS provides a measure of the total variability in the dataset. It represents the sum of the squared differences between each data point and the overall mean, regardless of any grouping or regression analysis. The TSS is often used as a baseline for comparing the variability explained by other factors, such as within-group or between-group variability.
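For grouped data, the TSS works as a baseline because it splits exactly into the between-group and within-group sums of squares. The sketch below checks this identity numerically on the three groups from the within-group example table; the variable names are assumptions made for illustration.

```python
import math

# The three groups from the within-group example table.
groups = [[10, 12, 14], [8, 9, 11], [7, 9, 10]]


def mean(values):
    return sum(values) / len(values)


all_values = [x for g in groups for x in g]
grand_mean = mean(all_values)

tss = sum((x - grand_mean) ** 2 for x in all_values)
ssb = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
ssw = sum((x - mean(g)) ** 2 for g in groups for x in g)

# TSS should equal SSB + SSW up to floating-point rounding.
print(math.isclose(tss, ssb + ssw))  # True
```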

For example, in a financial analysis scenario, you may want to determine the total variation in stock prices over a certain period. By calculating the TSS, you can assess the overall volatility in the market and compare it to other factors, such as industry-specific or company-specific volatility.

Regression Sum of Squares Calculation

To calculate the regression sum of squares, the following steps are typically followed:

- Fit the regression model to the data.

- Calculate the predicted values of the dependent variable based on the regression model.

- Calculate the mean of the dependent variable.

- Subtract the mean of the dependent variable from each predicted value to obtain the deviation from the mean.

- Square each deviation from the mean.

- Sum up all the squared deviations from the mean to obtain the regression sum of squares.
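The steps above can be sketched for the simplest case, a straight-line fit with one explanatory variable. This example assumes ordinary least squares, and the `x` and `y` values are made up purely for illustration; they are not from any real dataset.

```python
# Hypothetical illustrative data (not real observations).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

n = len(x)
x_mean = sum(x) / n
y_mean = sum(y) / n   # mean of the dependent variable

# Fit y = intercept + slope * x by ordinary least squares.
sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
sxx = sum((xi - x_mean) ** 2 for xi in x)
slope = sxy / sxx
intercept = y_mean - slope * x_mean

# Predicted values from the fitted model.
predicted = [intercept + slope * xi for xi in x]

# Regression sum of squares: squared deviations of predictions from the mean.
ssr = sum((p - y_mean) ** 2 for p in predicted)
print(round(ssr, 2))  # 3.6
```

For this data the total sum of squares of `y` is 6, so the fitted line accounts for 3.6 of the 6 units of variation.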

The regression sum of squares represents the amount of variation in the dependent variable that is explained by the regression model. On its own, its size depends on the scale and number of the observations, so it is usually judged relative to the total sum of squares. The closer the regression sum of squares is to the total sum of squares, the better the fit, while a small share suggests that the model does not explain much of the variation in the dependent variable.
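One common way to turn the regression sum of squares into a goodness-of-fit measure is the coefficient of determination, which divides it by the total sum of squares. The notation is an assumption introduced here: $\hat{y}_i$ is the predicted value for observation $i$, $y_i$ is the observed value, and $\bar{y}$ is the mean of the dependent variable.

$$R^2 = \frac{\mathrm{SSR}}{\mathrm{TSS}} = \frac{\sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$$

For an ordinary least squares fit that includes an intercept, this ratio lies between 0 and 1, with values near 1 indicating that the model explains most of the variation.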
