What is Homoskedasticity?

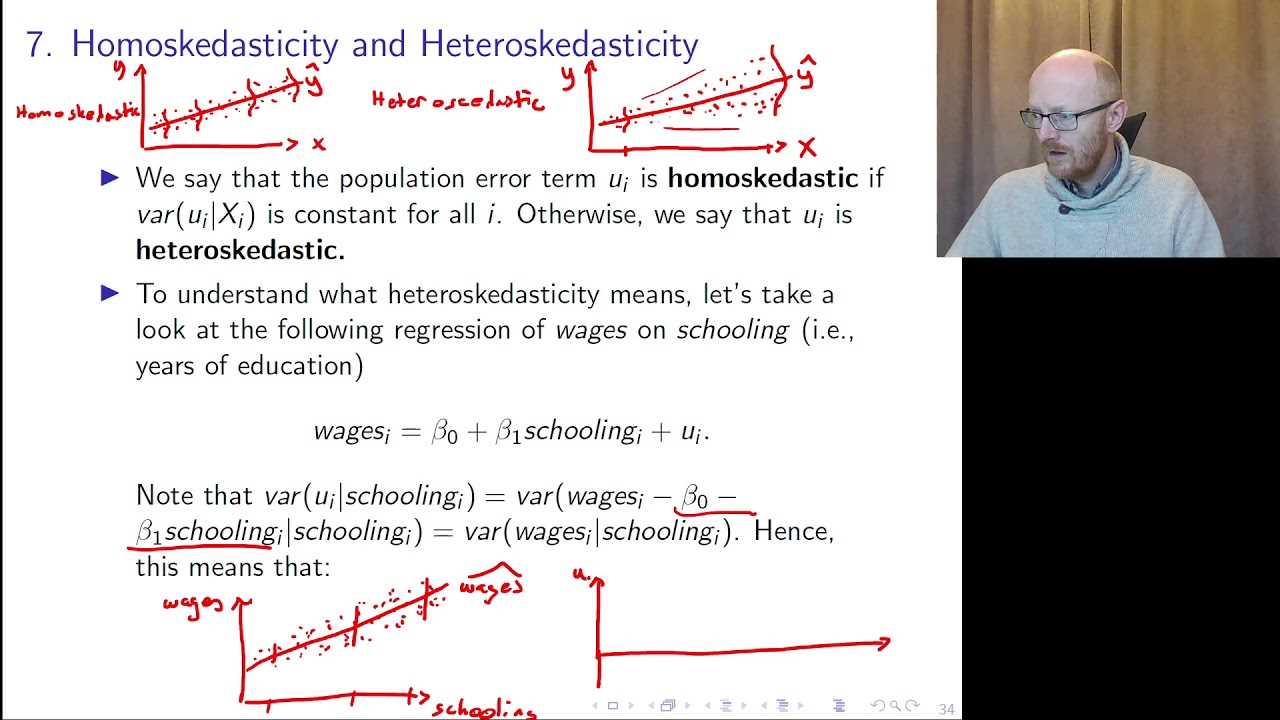

Homoskedasticity is a term used in regression modeling to describe a situation where the variability of the errors, or residuals, is constant across all levels of the independent variables. In other words, it means that the spread of the residuals is the same for all values of the predictor variables.

When homoskedasticity is present, it indicates that the relationship between the independent variables and the dependent variable is consistent and reliable. This is important because it allows for accurate predictions and valid statistical inferences to be made from the regression model.

On the other hand, when heteroskedasticity is present, it means that the variability of the errors is not constant across all levels of the independent variables. This can lead to biased estimates, inefficient parameter estimates, and incorrect hypothesis tests.

There are several ways to detect homoskedasticity in regression modeling. One common method is to plot the residuals against the predicted values or the independent variables. If the scatter plot of the residuals shows a random pattern with no clear relationship to the predicted values or independent variables, then homoskedasticity is likely present.

Overall, homoskedasticity is an important assumption in regression modeling. It ensures that the relationship between the independent variables and the dependent variable is consistent and reliable. Detecting and addressing heteroskedasticity is crucial for obtaining accurate and valid results from regression analysis.

Example of Homoskedasticity in Regression Modeling

Homoskedasticity is an important assumption in regression modeling. It refers to the condition where the variance of the error term is constant across all levels of the independent variables. In other words, it means that the spread of the residuals is the same for all values of the predictors.

When there is homoskedasticity, it indicates that the relationship between the independent variables and the dependent variable is consistent and predictable. This allows for more accurate and reliable predictions and inferences.

Let’s consider an example to better understand homoskedasticity in regression modeling. Suppose we want to examine the relationship between the number of hours studied and the exam scores of a group of students. We collect data on the number of hours studied (independent variable) and the corresponding exam scores (dependent variable) for a sample of students.

We then perform a regression analysis to determine the relationship between the two variables. After running the regression, we obtain the residuals, which are the differences between the actual exam scores and the predicted exam scores based on the regression equation.

If there is homoskedasticity, the plot of the residuals against the predicted exam scores will show a random scatter of points around zero, with no discernible pattern or trend. This indicates that the variance of the residuals is constant across all levels of the predicted exam scores.

On the other hand, if there is heteroskedasticity, the plot of the residuals will exhibit a clear pattern or trend, indicating that the variance of the residuals is not constant. This can lead to biased and inefficient estimates, as well as incorrect inferences.

Emily Bibb simplifies finance through bestselling books and articles, bridging complex concepts for everyday understanding. Engaging audiences via social media, she shares insights for financial success. Active in seminars and philanthropy, Bibb aims to create a more financially informed society, driven by her passion for empowering others.